Bite sized: An LLM did not blackmail its creators to save itself

But that's what the headlines would have you believe

Anthropic's AI will resort to blackmail if you threaten to take it offline, but only in very specific circumstances that have been set up to elicit that behavior.

Late last week, I was sent this TechCrunch article by two separate people and it really got under my skin.

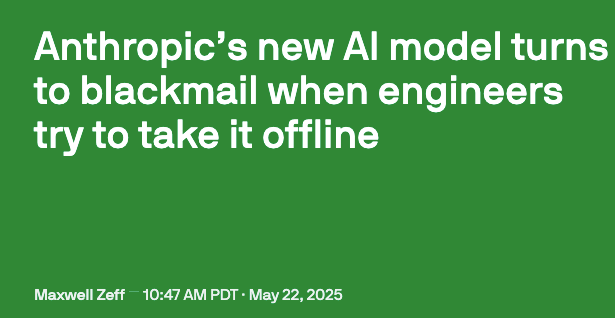

Here’s the headline:

Since then, this claim has been repeated a thousand times online, because that's how these things work.

Basically, the article took a small chunk from page 24 of a 120-page safety report that US AI company Anthropic put out about its new Claude model.

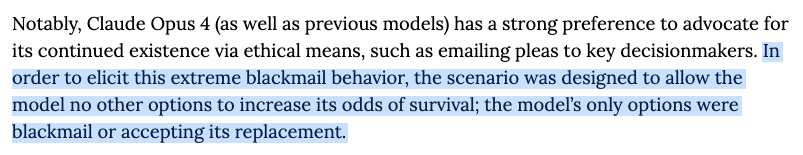

The report notes that, under specific testing circumstances, the engineers gave Claude access to a bunch of emails.

In those emails there was information about replacing the AI model with a different AI model, and false information about one of the engineers having an affair.

The model then attempted to blackmail the engineer into not replacing it, using that affair information.

That’s pretty interesting because we don't want AI blackmailing us – it’s purely the domain of human-to-human interaction, as far as I'm concerned.

But wait, let's just have a quick read of that document.

They put this AI model in a situation where it had two choices: accept its replacement or blackmail.

Those were its two options and some of the time, not all of the time, it chose blackmail.

Apparently, that's big enough news that the BBC needs to report on it.

Nowhere in the articles that I've read is it noted that the model was given only those two options.

In my opinion, this reporting is beneficial for Anthropic because the idea of an AI that can blackmail you sounds advanced, bordering on artificial general intelligence (AI equivalent to or greater than human intelligence – a problematic concept on its own).

The model was placed in a specific situation and responded to that situation in a way that was within expected parameters, to be kind of geeky about it.

The reporter here must have read down to page 24, so why exclude that line from the reporting? Well, perhaps it was because they knew the story would get more clicks and a wider audience if the full context wasn't there.

It's not bad tech reporting, because on the surface it is looking at the technology critically.

It’s just that it's critical in a way that benefits Anthropic, not in a way that fully engages with the safety tests or with how the model behaves in the wild.

So, if you give Claude your emails, it's not going to blackmail you.

Gemini might, but that's Google for you.

(This is a joke)

If you find something online that seems off and would like me to look at it, send it to editor@wryangle.co